Threatening bills

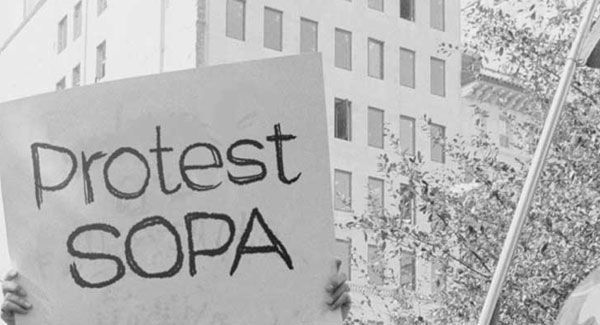

Yesterday was a crucial day in the history of the Internet. What was so special about January 18, you may ask? We'll tell you: the US Congress planned to take up for consideration a bill that would affect the whole structure of the World Wide Web - the Stop Online Piracy Act, or SOPA.

The bill was introduced at the end of October 2011 and deserves serious attention from everyone, not only from the authorities. The gist of SOPA is this: if adopted, the bill would let the US government control the whole world of information technology (that may sound dramatic, but it is true). Here is how it would work: if a special governmental body receives a complaint that a website infringes intellectual property rights, it can ban that website without any further investigation. Note that, according to the bill, this body's authority is not limited to the United States. That is why we say it would let the US rule the whole Internet, and it is one of the reasons the bill raised so much concern among website owners and the general public.

SOPA's 'younger brother' is PIPA, the PROTECT IP Act (Protect Intellectual Property Act). This bill seems a bit more liberal, but its purpose is the same: to limit the free sharing of knowledge over the Internet. Activists fighting for Internet freedom could not ignore it, but this case was special for another reason: the protest was supported by almost all of the largest websites, including Wikipedia, Facebook and even Google. Most of them decided to take part in the so-called SOPA blackout, which means replacing some of their web pages with something like this:

WordPress SOPA blackout initiative


Google, the enormous corporation best known for its search engine, couldn't afford such a radical step. It did, however, share some tips on how to avoid damaging your Google ranking when something is wrong with your website. These tips also come in handy when you perform server maintenance or any other task that requires temporarily changing your content. See below to find out how :)

Easy steps to protect your ranking

So, if you need to replace some of your pages with content you don't want the search engine bot to crawl and index, read the following instructions.

First of all, make it clear that Googlebot shouldn't index the content you place on these pages. To do that, return a 503 HTTP status code for every page that has been replaced. This also helps avoid indexing duplicate content, which would otherwise hurt your Google ranking significantly. Keep in mind, however, that if the number of 503 pages on your website suddenly increases, Googlebot will slow down crawling until the problem is solved. This reportedly does not affect long-term search results.
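To make the 503 idea concrete, here is a minimal sketch of a tiny WSGI application in Python that answers every request with a 503. The names `blackout_app`, `BLACKOUT_BODY` and `RETRY_AFTER_SECONDS` are made up for this illustration, and the `Retry-After` header with its one-hour value is our assumption rather than something stated above; your own server or CMS will most likely have its own way of doing this.

```python
from wsgiref.simple_server import make_server

# Hypothetical values for illustration only.
RETRY_AFTER_SECONDS = "3600"
BLACKOUT_BODY = b"<h1>This page is temporarily unavailable</h1>"


def blackout_app(environ, start_response):
    """WSGI app that answers every request with 503 Service Unavailable."""
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(BLACKOUT_BODY))),
        # Assumption: tell clients when it is worth trying again.
        ("Retry-After", RETRY_AFTER_SECONDS),
    ]
    start_response("503 Service Unavailable", headers)
    return [BLACKOUT_BODY]


if __name__ == "__main__":
    # Serve on localhost:8000 for a quick manual check.
    with make_server("127.0.0.1", 8000, blackout_app) as server:
        server.serve_forever()
```

Running the script and requesting any path on `http://127.0.0.1:8000/` should show the replacement page together with the 503 status, so the replaced content is marked as temporary rather than as the real page.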

You should also be extremely careful with your robots.txt file. It is the first file the bot looks for on your website, and it is where you specify the restrictions Googlebot should follow. For instance, you may want to hide pages such as the login or registration page from the bot; to do so, add the corresponding directives to robots.txt. Note that if robots.txt itself returns a 503, crawling of the whole website is blocked until the bot sees an acceptable status for this file: 200 or 404. So if you're switching off only a portion of your website, make sure robots.txt does not return 503. Do not try to stop the bot from crawling by disallowing the whole site in this file: that will make recovery take much longer.
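The two robots.txt rules above can be sketched in a few lines of Python. Everything named here is hypothetical: the blacked-out prefixes, the sample robots.txt contents and the helper names `status_for` and `googlebot_may_fetch` are ours, chosen only to illustrate a partial blackout where robots.txt keeps answering 200 and only the login and registration pages are hidden.

```python
from http import HTTPStatus
from urllib.robotparser import RobotFileParser

# Hypothetical sections of the site that are temporarily replaced.
BLACKED_OUT_PREFIXES = ("/blog/", "/docs/")

# Hypothetical robots.txt: hides two pages, does NOT disallow the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /register
"""


def status_for(path: str) -> HTTPStatus:
    """Pick the status code for a request path during a partial blackout."""
    # robots.txt must never return 503, or crawling of the whole site stops.
    if path == "/robots.txt":
        return HTTPStatus.OK
    if path.startswith(BLACKED_OUT_PREFIXES):
        return HTTPStatus.SERVICE_UNAVAILABLE
    return HTTPStatus.OK


def googlebot_may_fetch(path: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Check a path against robots.txt rules using the standard library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", path)


if __name__ == "__main__":
    for path in ("/robots.txt", "/blog/post-1", "/about"):
        print(path, int(status_for(path)))
    for path in ("/login", "/about"):
        print(path, googlebot_may_fetch(path))
```

A check like `googlebot_may_fetch` is also a cheap way to confirm, before and after the blackout, that nobody has slipped a site-wide `Disallow: /` into the file.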

If you notice crawl errors in your log files, do not worry. Don't forget that Googlebot normally notices even minor changes in a website's behavior, so it will record erroneous responses as well. Make sure, however, that these errors disappear once you have restored everything to the way it was before the changes. Do not change more than you need to. Don't change the DNS settings or the contents of the robots.txt file, and do not even think about changing the crawl rate in Google's Webmaster Tools during the maintenance period.
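If you want to keep an eye on those errors yourself, a rough sketch like the one below can count the status codes Googlebot received. It assumes your server writes access logs in the common Apache/nginx "combined" format and that matching the user-agent string on "Googlebot" is good enough for a quick look; the function name `googlebot_status_counts` and the file name `access.log` are hypothetical.

```python
import re
from collections import Counter

# Assumption: "combined" log format, where the line ends with
# "request" status size "referer" "user-agent".
LINE_RE = re.compile(
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_status_counts(lines):
    """Count HTTP status codes for requests whose user agent mentions Googlebot."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts


if __name__ == "__main__":
    # "access.log" is a placeholder; point this at your own log file.
    with open("access.log", encoding="utf-8", errors="replace") as log:
        for status, count in sorted(googlebot_status_counts(log).items()):
            print(status, count)
```

During the blackout you would expect a bump in 503 entries; once everything is restored, that count should stop growing.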

We hope these hints will prove useful when you get down to another round of the endless task: improving your own piece of the Internet.